GenSX Overview

GenSX is a simple TypeScript framework for building complex LLM applications. It’s a workflow engine designed for building agents, chatbots, and long-running workflows. In addition, GenSX Cloud offers serverless hosting, cloud storage, and tracing and observability to build production-ready agents and workflows.

Workflows in GenSX are built by composing functional, reusable components in plain TypeScript:

```typescript
const WriteBlog = gensx.Workflow(
  "WriteBlog",
  async ({ prompt }: WriteBlogInput) => {
    const draft = await WriteDraft({ prompt });
    const editedVersion = await EditDraft({ draft });
    return editedVersion;
  }
);

const result = await WriteBlog({ prompt: "Write a blog post about AI developer tools" });

console.log(result);
```

Most LLM frameworks are graph-oriented, inspired by popular Python tools like Airflow. You express nodes, edges, and a global state object for your workflow. While graph APIs are highly expressive, they are also cumbersome:

- Building a mental model and visualizing the execution of a workflow from a node/edge builder is difficult.

- Global state makes refactoring difficult.

- All of this leads to low velocity when experimenting with and evolving your LLM workflows.

With GenSX, building LLM workflows is as simple as writing TypeScript functions, with no need for a graph DSL or special abstractions. You use regular language features like function composition, control flow, and recursion to express your logic. You wrap your functions in the gensx.Workflow() and gensx.Component() higher-order functions to take advantage of all the GenSX features. To learn more about why GenSX uses components, read "Why Components?".
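As a rough sketch of what "regular language features" can look like, the example below expresses a shorten-until-it-fits step with a conditional and plain recursion. The `component` wrapper is a hypothetical stand-in so the snippet runs without dependencies; it is not `gensx.Component`. `Shorten` and `FitToLength` are illustrative names, and a string operation stands in for an LLM call.

```typescript
type Step<I, O> = (input: I) => Promise<O>;

// Hypothetical stand-in for a component wrapper: it only attaches a name.
// It is NOT the GenSX implementation.
function component<I, O>(name: string, fn: Step<I, O>): Step<I, O> {
  const wrapped: Step<I, O> = (input) => fn(input);
  Object.defineProperty(wrapped, "name", { value: name });
  return wrapped;
}

interface FitInput {
  draft: string;
  maxWords: number;
}

// Placeholder for an LLM call: drops the last sentence of the draft.
const Shorten = component(
  "Shorten",
  async ({ draft }: { draft: string }) =>
    draft.split(". ").slice(0, -1).join(". ") + ".",
);

// A conditional and plain recursion decide when to stop; no graph edges needed.
const FitToLength: Step<FitInput, string> = component(
  "FitToLength",
  async ({ draft, maxWords }: FitInput) => {
    const wordCount = draft.trim().split(/\s+/).length;
    // Base case: short enough, or only one sentence left to work with.
    if (wordCount <= maxWords || !draft.includes(". ")) return draft;
    const shorter = await Shorten({ draft });
    return FitToLength({ draft: shorter, maxWords }); // recurse on the shorter draft
  },
);
```

Calling `FitToLength({ draft, maxWords: 6 })` keeps dropping trailing sentences until the draft fits. The stopping rule is an ordinary `if`, so changing it is a one-line edit.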

GenSX Cloud

GenSX Cloud provides everything you need to ship production-grade agents and workflows, including a serverless runtime designed for long-running workloads, cloud storage for building stateful workflows and agents, and tracing and observability.

Serverless deployments

Deploy any workflow to a hosted API endpoint with a single command. The GenSX cloud platform handles scaling, infrastructure management, and API generation automatically:

```bash
# Deploy your workflow to GenSX Cloud
$ gensx deploy ./src/workflows.tsx

✔ Building workflow using Docker
✔ Generating schema
✔ Successfully built project
ℹ Using project name from gensx.yaml: support-tools
✔ Deploying project to GenSX Cloud (Project: support-tools)
✔ Successfully deployed project to GenSX Cloud

Dashboard: console/support-tools/default/workflows

Available workflows:
- ChatAgent
- TextToSQLWorkflow
- RAGWorkflow
- AnalyzeDiscordWorkflow

Project: support-tools
Environment: default
```

The platform is optimized for AI workloads with millisecond-level cold starts and support for long-running executions up to an hour.

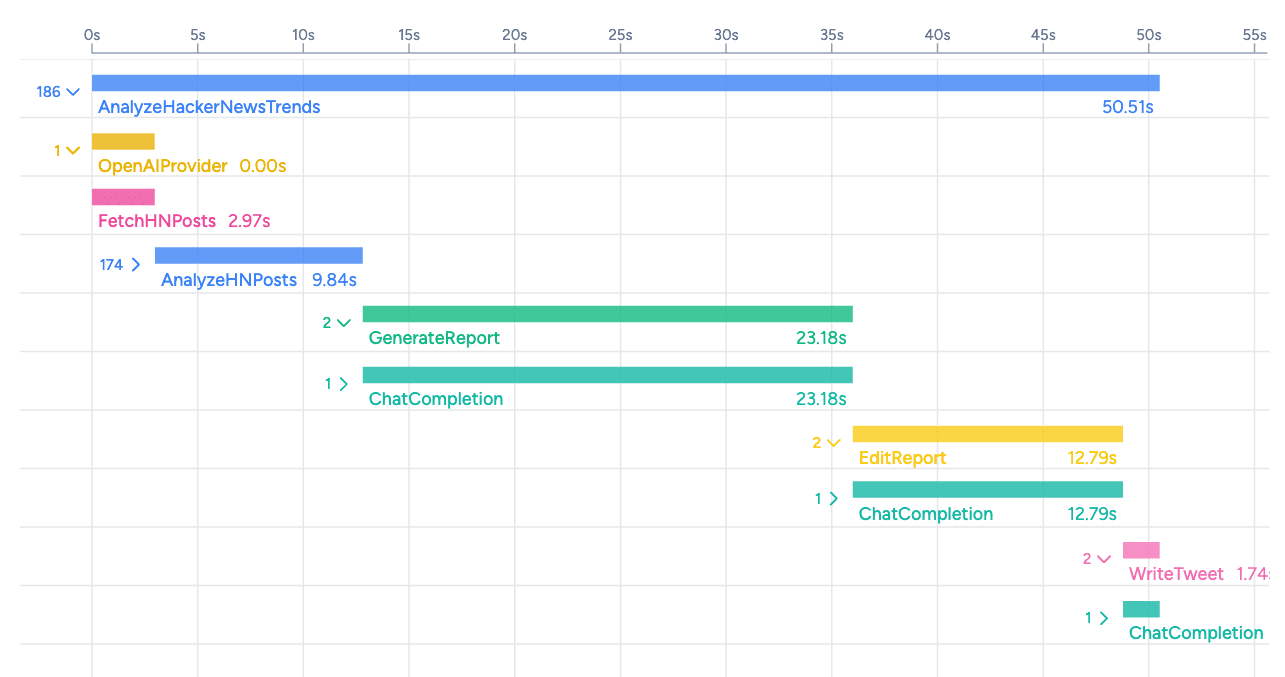

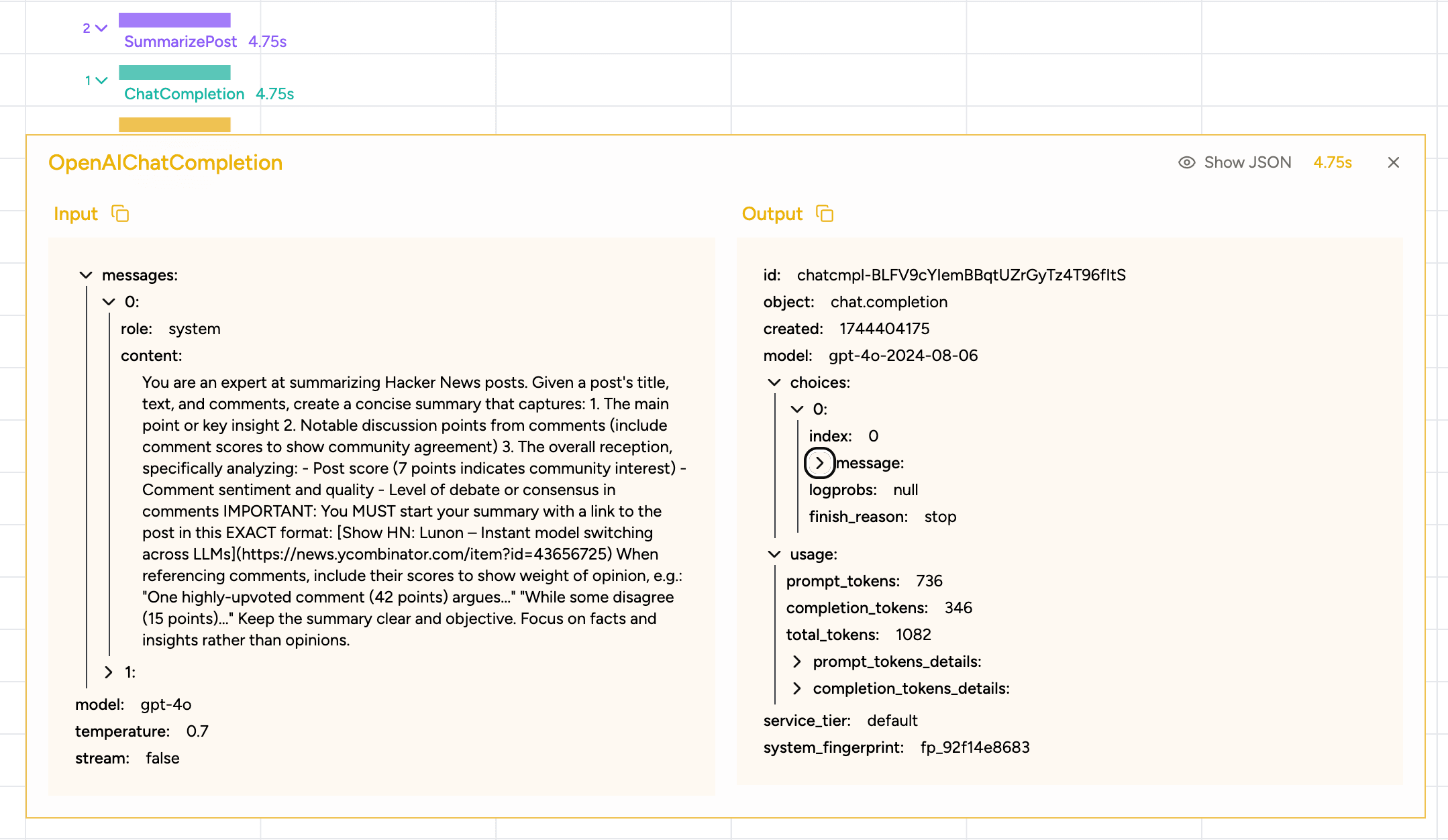

Tracing and observability

GenSX Cloud provides comprehensive tracing and observability for all your workflows and agents.

Inputs and outputs are recorded for every component that executes in your workflows, including prompts, tool calls, and token usage. This makes it easy to debug hallucinations and prompt upgrades and to monitor costs.
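One way to picture why per-component recording falls out of the component model: a higher-order wrapper sees every input and output that passes through it. The sketch below illustrates that idea only. It is not how GenSX Cloud implements tracing, and `traced`, `TraceEntry`, `Summarize`, and `Shout` are hypothetical names.

```typescript
interface TraceEntry {
  component: string;
  input: unknown;
  output: unknown;
  durationMs: number;
}

// In-memory trace log for this sketch; a hosted service would ship entries elsewhere.
const trace: TraceEntry[] = [];

// Hypothetical wrapper: records the input, output, and duration of every call.
function traced<I, O>(
  name: string,
  fn: (input: I) => Promise<O>,
): (input: I) => Promise<O> {
  return async (input: I) => {
    const start = Date.now();
    const output = await fn(input);
    trace.push({ component: name, input, output, durationMs: Date.now() - start });
    return output;
  };
}

// Two placeholder steps; string operations stand in for LLM calls.
const Summarize = traced("Summarize", async ({ text }: { text: string }) =>
  text.slice(0, 20),
);
const Shout = traced("Shout", async ({ text }: { text: string }) =>
  text.toUpperCase(),
);
```

After running `Shout({ text: await Summarize({ text }) })`, `trace` holds one entry per step in execution order, which is the kind of record that makes a misbehaving step easy to locate.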

Cloud storage

Build stateful AI applications with zero configuration using built-in storage primitives that provide managed blob storage, SQL databases, and full-text + vector search:

```typescript
interface ChatWithMemoryInput {
  userInput: string;
  threadId: string;
}

const ChatWithMemory = gensx.Component(
  "ChatWithMemory",
  async ({ userInput, threadId }: ChatWithMemoryInput) => {
    // Load chat history from blob storage
    const blob = useBlob<ChatMessage[]>(`chat-history/${threadId}.json`);
    const history = await blob.getJSON();

    // Add new message and run LLM
    // ...

    // Save updated history
    await blob.putJSON(updatedHistory);

    return response;
  },
);
```

For more details about GenSX Cloud, see the complete cloud reference.
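The load, append, save pattern in the `ChatWithMemory` example can be sketched end to end with an in-memory stand-in. `useMemoryBlob` below is hypothetical and only mirrors the `getJSON`/`putJSON` shape shown above. Returning `null` for a missing key is an assumption of this sketch, and the echo reply is a placeholder for the LLM call.

```typescript
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Hypothetical in-memory stand-in for blob storage (not the GenSX storage API).
const store = new Map<string, string>();

function useMemoryBlob<T>(path: string) {
  return {
    // Assumption: a missing blob reads as null.
    async getJSON(): Promise<T | null> {
      const raw = store.get(path);
      return raw === undefined ? null : (JSON.parse(raw) as T);
    },
    async putJSON(value: T): Promise<void> {
      store.set(path, JSON.stringify(value));
    },
  };
}

async function chatWithMemory(userInput: string, threadId: string): Promise<string> {
  const blob = useMemoryBlob<ChatMessage[]>(`chat-history/${threadId}.json`);

  // First turn of a thread: nothing stored yet, so start from an empty history.
  const history = (await blob.getJSON()) ?? [];

  // Placeholder for the LLM call.
  const response = `echo: ${userInput}`;

  // Append both sides of the exchange and persist the result.
  const updatedHistory: ChatMessage[] = [
    ...history,
    { role: "user", content: userInput },
    { role: "assistant", content: response },
  ];
  await blob.putJSON(updatedHistory);

  return response;
}
```

Because the history is keyed by `threadId`, two calls with the same thread accumulate four messages, while a different thread starts empty.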

Reusable by default

GenSX components are pure functions, depend on zero global state, and are reusable by default. Components accept inputs and return an output just like any other function.

```typescript
interface ResearchTopicInput {
  topic: string;
}

const ResearchTopic = gensx.Component(
  "ResearchTopic",
  async ({ topic }: ResearchTopicInput) => {
    console.log("📚 Researching topic:", topic);
    const systemPrompt = `You are a helpful assistant that researches topics...`;
    // `openai` is an OpenAI SDK client created elsewhere
    const result = await openai.chat.completions.create({
      model: "gpt-4.1-mini",
      messages: [
        { role: "system", content: systemPrompt },
        { role: "user", content: topic },
      ],
    });
    // The generated text lives on the first choice's message
    return result.choices[0].message.content;
  },
);
```

Because components are pure functions, they are easy to test and evaluate in isolation. This enables you to move quickly and experiment with the structure of your workflow.
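To illustrate testing in isolation, here is a minimal sketch in which the model call is passed in as a function, so a test can swap in a deterministic fake. `makeResearchTopic`, `Complete`, and `fakeComplete` are hypothetical names for this example and are not part of GenSX.

```typescript
// Shape of a completion function: system prompt and user message in, text out.
type Complete = (system: string, user: string) => Promise<string>;

// Hypothetical factory: builds a research step around any completion function.
function makeResearchTopic(complete: Complete) {
  return async ({ topic }: { topic: string }): Promise<string> =>
    complete("You are a helpful assistant that researches topics...", topic);
}

// Deterministic fake used instead of a real model in tests.
const fakeComplete: Complete = async (_system, user) => `notes on ${user}`;

// The step depends only on its input and the injected function,
// so the test needs no network access, API key, or shared state.
async function testResearchTopic(): Promise<void> {
  const ResearchTopic = makeResearchTopic(fakeComplete);
  const result = await ResearchTopic({ topic: "vector search" });
  if (result !== "notes on vector search") {
    throw new Error(`unexpected result: ${result}`);
  }
}
```

The same factory can be handed a real client in production. Whether to inject the client or import it directly is a design choice, and injection is shown here only because it keeps the test self-contained.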

A second-order benefit of reusability is that components can be shared and published to package managers like npm. If you build a functional component, you can make it available to the community, something that frameworks that depend on global state preclude by default.

Composition

All GenSX components support composition through standard programming patterns. This creates a natural way to pass data between steps and organize workflows:

```typescript
const WriteBlog = gensx.Workflow(
  "WriteBlog",
  async ({ prompt }: WriteBlogInput) => {
    const research = await Research({ prompt });
    const draft = await WriteDraft({ prompt, research: research.flat() });
    const editedDraft = await EditDraft({ draft });
    return editedDraft;
  },
);
```

There is no need for a DSL or graph API to define the structure of your workflow. Nest components within components, run components in parallel, and use loops and conditionals to create complex workflows just like you would with any other TypeScript program.
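As a small sketch of running steps in parallel and branching with a conditional, the example below fans out over a list with `Promise.all` and only adds a summarization step when there are too many results. All step names are hypothetical, and string operations stand in for LLM calls.

```typescript
// Hypothetical steps; string operations stand in for LLM calls.
const ResearchTopic = async ({ topic }: { topic: string }): Promise<string> =>
  `notes on ${topic}`;

const Summarize = async ({ notes }: { notes: string[] }): Promise<string> =>
  `summary of ${notes.length} notes`;

interface ResearchAllInput {
  topics: string[];
  maxNotes: number;
}

async function ResearchAll({ topics, maxNotes }: ResearchAllInput): Promise<string[]> {
  // Fan out: start one research step per topic and wait for all of them.
  const notes = await Promise.all(
    topics.map((topic) => ResearchTopic({ topic })),
  );

  // Conditional: only add a summarization step when there is too much to pass along.
  if (notes.length > maxNotes) {
    return [await Summarize({ notes })];
  }
  return notes;
}
```

`Promise.all` preserves input order, so `notes[i]` corresponds to `topics[i]` regardless of which call finishes first.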

Visual clarity

Workflow composition reads naturally from top to bottom like a standard programming language:

```typescript
const AnalyzeHackerNewsTrends = gensx.Workflow(
  "AnalyzeHackerNewsTrends",
  async ({ postCount }: { postCount: number }) => {
    const stories = await FetchHNPosts({ limit: postCount });
    const { analyses } = await AnalyzeHNPosts({ stories });
    const report = await GenerateReport({ analyses });
    const editedReport = await EditReport({ content: report });
    const tweet = await WriteTweet({
      context: editedReport,
      prompt: "Summarize the HN trends in a tweet",
    });

    return { report: editedReport, tweet };
  },
);
```

Contrast this with graph APIs, where you need to build a mental model of the workflow from a node/edge builder:

```typescript
const graph = new Graph()
  .addNode("fetchHNPosts", fetchHNPosts)
  .addNode("analyzeHNPosts", analyzePosts)
  .addNode("generateReport", generateReport)
  .addNode("editReport", editReport)
  .addNode("writeTweet", writeTweet);

graph
  .addEdge(START, "fetchHNPosts")
  .addEdge("fetchHNPosts", "analyzeHNPosts")
  .addEdge("analyzeHNPosts", "generateReport")
  .addEdge("generateReport", "editReport")
  .addEdge("editReport", "writeTweet")
  .addEdge("writeTweet", END);
```

Using components to compose workflows makes dependencies explicit, and it is easy to see the data flow between steps. No graph DSL required.

Designed for velocity

The GenSX programming model is optimized for speed of iteration in the long run.

The typical journey building an LLM application looks like:

- Ship a prototype that uses a single LLM prompt.

- Add in evals to measure progress.

- Add in external context via RAG.

- Break tasks down into smaller discrete LLM calls chained together to improve quality.

- Add advanced patterns like memory and self-reflection.

Experimentation speed depends on the ability to refactor, rearrange, and inject new steps. In our experience, frameworks that revolve around global state and a graph model slow this down.

The functional component model in GenSX supports an iterative loop that is fast on day one through day 1000.
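To make "inject new steps" concrete, here is a sketch of a two-step workflow and a revision that adds a third step. Plain async functions stand in for components, and `FactCheck` and the V1/V2 names are hypothetical.

```typescript
// Hypothetical steps; string operations stand in for LLM calls.
const WriteDraft = async ({ prompt }: { prompt: string }): Promise<string> =>
  `draft: ${prompt}`;
const EditDraft = async ({ draft }: { draft: string }): Promise<string> =>
  draft.trim();
const FactCheck = async ({ draft }: { draft: string }): Promise<string> =>
  `${draft} [checked]`;

// Version 1: write, then edit.
async function WriteBlogV1({ prompt }: { prompt: string }): Promise<string> {
  const draft = await WriteDraft({ prompt });
  return EditDraft({ draft });
}

// Version 2: the new step is one added line plus one changed argument.
// No node registration, edge rewiring, or shared state schema to update.
async function WriteBlogV2({ prompt }: { prompt: string }): Promise<string> {
  const draft = await WriteDraft({ prompt });
  const checked = await FactCheck({ draft }); // injected step
  return EditDraft({ draft: checked });
}
```

Removing or reordering a step is the same kind of local edit, and the type checker flags any call whose input no longer matches.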